OK, I often have to explain to people what I do, and in most cases I get an enquiring and mystified look! What is Speech Recognition, let alone VUI (Voice User Interface) Design? So I guess I have to go back to basics for a bit and explain what Speech Recognition is and what speech recognition applications involve.

What is Speech Recognition then?

Speech Recognition is the conversion of speech to text. The words that you speak are turned into a written representation of those words for the computer to process further (figure out what you want in order to decide what to do or say next). This is not an exact science because – even among us humans – speech recognition is difficult and is fraught with misunderstandings or incomplete understanding. How many times have you had to repeat your name to someone (both in person and on the phone)? How many times have you had someone cracking up with laughter, because they thought you said something different to what you actually said? These are examples of human speech recognition failing magnificently! So it is no wonder that machines do it even less well. It’s all guesswork really.

In the case of machine speech recognition, the machine will have a kind of lexicon at its disposal, with the possible words in the corresponding language (English, French, German etc.) and their phonetic representation. This phonetic representation describes the ways that people are most likely to pronounce each specific word (think of the Queen’s English, or Hochdeutsch for German, at this point). Now if you bring regional accents and foreigners speaking the language into the equation, things get even more complicated. The very same letter combinations or whole words are pronounced completely differently depending on whether you are from London, Liverpool, Newcastle, Edinburgh, Dublin, Sydney, New York, or New Orleans. Likewise, the very same English letter combinations and words will sound even more different when spoken by a Greek, a German or a Japanese person. In order to deal with those cases, speech recognition lexica are augmented with additional “pronunciations” for each problematic word. So the machine can hear 3 different versions of the same word spoken by different people and still recognise it as one and the same word. Sorted! Of course, you don’t need to go to all this trouble for every possible word or phrase in the language you are covering with your speech application. You only need to go to such lengths for words and phrases that are relevant to your specific application (and domain), as well as for accents that are representative of your end-user population. If an app is going to be used mainly in England, you are better off covering Punjabi and Chinese pronunciations of your English app words rather than Japanese or German variants. There will of course be Japanese and German users of your system, but they represent a much smaller percentage of your user population, and we can’t have everything!!
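To make the lexicon idea concrete, here is a minimal Python sketch. It assumes a lexicon is simply a mapping from each word to a list of accepted pronunciations. The phone strings use an ARPAbet-like notation and are illustrative only. The helper names (`add_variant`, `build_reverse_index`) are hypothetical and not taken from any real recogniser. "Augmenting" the lexicon just means adding another variant, so that several different pronunciations all lead back to one and the same word.

```python
# Hypothetical pronunciation lexicon: each word maps to a list of accepted
# pronunciations in an ARPAbet-like notation (stress marks omitted).
# The phone strings are illustrative, not copied from a production lexicon.
LEXICON = {
    "tomato": ["T AH M EY T OW", "T AH M AA T OW"],
    "data": ["D EY T AH", "D AE T AH"],
}


def add_variant(lexicon, word, phones):
    """Augment the lexicon with an extra pronunciation, e.g. for a regional accent."""
    variants = lexicon.setdefault(word, [])
    if phones not in variants:
        variants.append(phones)


def build_reverse_index(lexicon):
    """Map every pronunciation variant back to the single word it belongs to."""
    index = {}
    for word, variants in lexicon.items():
        for phones in variants:
            index[phones] = word
    return index


# A third variant of "data", as speakers in a hypothetical target population might say it.
add_variant(LEXICON, "data", "D AA T AH")

index = build_reverse_index(LEXICON)
print(index["T AH M AA T OW"])  # tomato
print(index["D AA T AH"])       # data
```

A real recogniser matches the incoming sounds against these pronunciations probabilistically rather than by exact string lookup. The principle of many pronunciations per word is the same, though.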

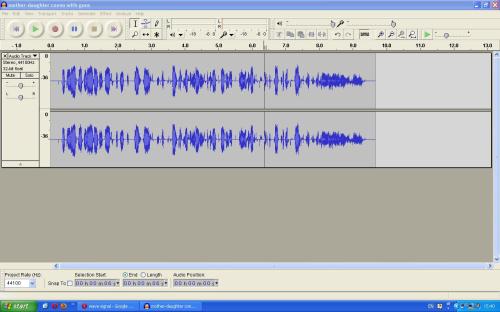

Speech recognition may be based on text representations of words and their phonetic “translation” (pronunciations), but the whole process is actually statistical. What you say will be processed by the system as a wave signal like this one here:

So the machine will have to figure out what you’re saying by chopping this signal up into parts, each representing a word that makes sense in the context of the surrounding words. Unfortunately the same signal can potentially be chopped up in several different ways, each representing a different string of words and of course a different meaning! There’s a famous example of the following ambiguous string:

The same speech signal can be heard as “How to wreck a nice beach” or .. “How to recognise speech”!!! They sound very similar actually!! (Taken from FNLP 2010: Lecture 1) So you can see the types of problems that we humans, let alone a machine, are faced with when trying to recognise each other!
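“Wreck a nice beach” and “recognise speech” only sound alike; their phones are not identical. To show the chopping-up problem exactly, we can borrow the classic “ice cream” / “I scream” pair, whose phone sequences are identical. The sketch below is a toy under stated assumptions: a hypothetical four-word lexicon in ARPAbet-like notation, and no acoustics at all. It lists every way a phone sequence can be split into lexicon words.

```python
from functools import lru_cache

# Toy lexicon in ARPAbet-like notation (stress marks omitted); illustrative only.
LEXICON = {
    "I": ("AY",),
    "ICE": ("AY", "S"),
    "SCREAM": ("S", "K", "R", "IY", "M"),
    "CREAM": ("K", "R", "IY", "M"),
}


def segmentations(phones):
    """Return every way to chop a phone sequence into lexicon words."""
    phones = tuple(phones)

    @lru_cache(maxsize=None)
    def from_position(i):
        # All word sequences covering phones[i:]; memoised so each suffix is solved once.
        if i == len(phones):
            return [[]]  # one way to cover nothing: the empty word list
        results = []
        for word, pron in LEXICON.items():
            if phones[i:i + len(pron)] == pron:
                for rest in from_position(i + len(pron)):
                    results.append([word] + rest)
        return results

    return [" ".join(words) for words in from_position(0)]


print(segmentations(["AY", "S", "K", "R", "IY", "M"]))  # ['I SCREAM', 'ICE CREAM']
```

A real recogniser faces a huge number of such competing splits per utterance. It ranks them by probability instead of listing them all, and that is where the statistics come in.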

Speech Recognition Techniques

The approach to speech recognition described above, which uses hand-crafted lexica, is the standard “manual” approach. This is effective and sufficient for applications that represent very limited domains, e.g. ordering a printer or getting your account balance. The lexica and the corresponding manual “grammars” can describe most relevant phrases that are likely to be spoken by the user population. Any other phrases will be just irrelevant one-offs that can be ignored without negatively affecting the performance of the system.
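Here is a rough Python illustration of a hand-crafted grammar for a hypothetical “account balance” app. Production IVR platforms usually express such grammars in a dedicated format (the W3C SRGS standard is a common one) and use them to constrain what the recogniser listens for. This toy version simply enumerates every sentence the grammar allows and checks whether a recognised string is one of them. The slot lists are made up for the example.

```python
import itertools

# Hypothetical hand-crafted grammar for a tiny "account balance" app.
# Each slot lists the phrasings the designer expects; "" makes a slot optional.
PREFIXES = ["", "i want", "i'd like", "can i have"]
CORES = ["my balance", "my account balance", "balance"]
SUFFIXES = ["", "please"]


def expand_grammar():
    """Enumerate every sentence the grammar accepts."""
    sentences = set()
    for prefix, core, suffix in itertools.product(PREFIXES, CORES, SUFFIXES):
        sentences.add(" ".join(part for part in (prefix, core, suffix) if part))
    return sentences


GRAMMAR = expand_grammar()


def in_grammar(utterance):
    """True if the recognised text (case and extra spaces ignored) is covered."""
    return " ".join(utterance.lower().split()) in GRAMMAR


print(len(GRAMMAR))                                # 24
print(in_grammar("Can I have my balance please"))  # True
print(in_grammar("what's left in my account"))     # False
```

Anything outside the enumerated phrases, such as “what’s left in my account”, is simply rejected. In a very limited domain that is acceptable, but it is exactly the coverage gap the statistical approach tries to close.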

For anything more complex and advanced, there is the “statistical” approach. This involves the collection of large amounts of real-world speech data, preferably in your application domain: medical data for medical apps, online shopping data for a catalogue ordering app etc. The statistical recogniser will be trained on this data over multiple passes, resulting in statistical representations of the most likely and meaningful combinations of sounds in the specific human language (English, German, French, Urdu etc.). This type of speech recogniser is much more robust and accurate than a “symbolic” recogniser (which uses the manual approach), because it can accurately predict sound and word combinations that could not have been pre-programmed in a hand-crafted grammar. Thus statistical recognisers have much better coverage of what people actually say (rather than what the programmer or linguist thinks that people say). Sadly, most speech apps (the Interactive Voice Response systems or IVRs used in Call Centre automation, for instance) are based on the manual symbolic approach rather than the fancy statistical one. This is because the latter requires considerable amounts of data, and this data is not readily available (especially for a new app that has never existed before). A lot of time would need to be spent recording relevant human-to-human conversations, and even more time analysing them in a useful manner. Even when data is available, things such as cost and privacy protection get in the way of either acquiring it or putting it into use.
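The statistical idea can be sketched, very loosely, with a bigram language model. You count which words follow which in some in-domain data, then use those counts to decide which candidate string is more plausible. The corpus below is hypothetical, and add-one smoothing is just one simple choice among many. A real statistical recogniser also combines this with an acoustic model of the sounds themselves, which is not shown here.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count word pairs in a training corpus (a toy 'statistical representation')."""
    histories, bigrams, vocab = Counter(), Counter(), {"</s>"}
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(words[1:-1])
        histories.update(words[:-1])           # every word that precedes another
        bigrams.update(zip(words, words[1:]))  # (previous word, next word) pairs
    return histories, bigrams, len(vocab)


def log_prob(sentence, model):
    """Log-probability of a word string under the bigram model."""
    histories, bigrams, vocab_size = model
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(words, words[1:]):
        # Add-one (Laplace) smoothing: unseen pairs become unlikely, not impossible.
        total += math.log((bigrams[(prev, word)] + 1) / (histories[prev] + vocab_size))
    return total


# Hypothetical in-domain training data for a speech-technology help desk.
corpus = [
    "how to recognise speech",
    "how to recognise speech with a computer",
    "speech recognition for call centres",
    "how to train a speech recogniser",
]
model = train_bigram(corpus)

for hypothesis in ["how to recognise speech", "how to wreck a nice beach"]:
    print(f"{log_prob(hypothesis, model):8.2f}  {hypothesis}")
```

With this tiny, made-up corpus, “how to recognise speech” gets a much higher log-probability than “how to wreck a nice beach”. Trained on beach-holiday data instead, the ranking could flip. This is why data from your own application domain matters so much.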

Speech Recognition Applications

By now you should have realised how complex speech recognition is at the best of times, let alone how difficult it is to recognise people with different regional accents, linguistic backgrounds, and .. even moods or health conditions! (more on that later) Now let’s look at the different types of speech recognition applications. First of all, we should distinguish between speaker-dependent and speaker-independent apps.

Speaker-dependent applications involve the automatic speech recognition of a single person / speaker. It could be the dictation system you’ve installed on your PC to take notes or start writing emails and letters. It could be the hand-held dictation system you carry around as a doctor or a lawyer, composing a medical report on your patients or talking to your clients while walking up and down the room. It could even be your standard mobile phone or smartphone / iPhone / Android that you use to call (voice dial) one of your saved contacts, search your music library for a track with a simple voice command (or two), or even tweet. All these are speaker-dependent applications in that the corresponding recogniser has been trained to work with your voice and your voice only. Training may take as little as 5 minutes of speaking to it, or more or less in other cases. After that, it will work sufficiently well with your voice even if you’ve got a cold (and therefore a hoarse voice) or you’re feeling low (and are therefore quieter than usual). Give it to your mate or colleague, though, and it will break down or misrecognise them in some way. The same recogniser will have to be retrained for any other speaker in order to work.
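Why does a speaker-dependent recogniser break down for your mate? One classic technique for small-vocabulary, speaker-dependent tasks such as voice dialling is template matching with dynamic time warping (DTW). We use it here purely as an illustration; it is not necessarily what any particular product does. During enrolment the system stores your own recordings as templates. At recognition time it picks the closest template, tolerating differences in speaking speed. The feature values below are toy 1-D numbers standing in for real acoustic features, and the `threshold` value is an arbitrary assumption.

```python
def dtw_distance(a, b):
    """Dynamic time warping: align two sequences that may differ in speed."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local distance between two frames
            cost[i][j] = d + min(cost[i - 1][j],      # repeat a frame of b
                                 cost[i][j - 1],      # repeat a frame of a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]


# Enrolment ("training"): the owner says each command once, and these
# toy feature sequences are stored as that one speaker's templates.
TEMPLATES = {
    "call mum": [1.0, 3.0, 4.0, 3.0, 1.0],
    "call office": [2.0, 2.0, 5.0, 6.0, 5.0, 2.0],
}


def recognise(features, templates, threshold=2.0):
    """Return the closest template's label, or None if nothing is close enough."""
    best_label, best_dist = None, float("inf")
    for label, template in templates.items():
        dist = dtw_distance(features, template)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= threshold else None


owner = [1.0, 1.0, 3.0, 4.0, 4.0, 3.0, 1.0]  # the owner, speaking a little more slowly
mate = [4.0, 6.0, 8.0, 6.0, 4.0]             # a different voice, different features
print(recognise(owner, TEMPLATES))  # call mum
print(recognise(mate, TEMPLATES))   # None
```

The owner’s slower “call mum” still aligns perfectly with the stored template. The mate’s voice is too far from every template and gets rejected, so the system would have to be re-enrolled for them.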

Enter speaker-independent speech recognition systems! They have been trained on huge amounts of real-world data from thousands of speakers of all kinds of linguistic, ethnic, regional, and educational backgrounds. As a result, these systems can recognise anyone: you, your mate, all your colleagues, and anyone else you are likely to meet in the future. They are not tied to the way you pronounce things, your physiology or your voiceprint; they have been developed to work with any human (or indeed any machine pretending to be a human, come to think of it!). So when you buy off-the-shelf speech recognition software, it’s going to work immediately with any speaker, even if badly in some cases. You can later customise it for your specific app world and your target user population, usually with some external help (enter Professional Services providers).

Speaker-independent applications can work on any phone (mobile or landline) and are used mainly to (partly) automate Call Centres and Helplines, e.g. speech and DTMF IVRs for online shopping, telephone banking or e-Government apps. OK, speech recognition on a mobile can be tricky. The signal may be poor or intermittent, the line could be crackling, and of course there is the additional problem of background noise, since you are most likely to use your mobile out in busy streets or some other loud environment. Speaker-independent recognition is also used to create voice portals, i.e. speech-enabled versions of websites for greater accessibility and usability (think of disabled Web users). Moreover, speaker-independent recognisers are used for voicemail transcription. This is where all the voicemails you receive on your phone are transcribed automatically and sent to you as text messages, for instant and – importantly – discreet access. Speaker-independent apps are B2B applications, which means that the solution is sold to a company (a Call Centre, a Bank, a Government organisation).
In contrast, speaker-dependent apps are B2C apps: they are sold directly to the individual end customer.

Because speaker-independent apps have to work with any speaker calling from any device or channel (even the web, think of Skype), the corresponding speech recogniser is usually stored on a server or in the cloud somewhere. Speaker-dependent apps, on the other hand, are stored locally on your personal PC, laptop, Mac, mobile phone or handheld.

And to clear up any potential confusion beforehand: when you ring an automated Call Centre IVR from your mobile (for instance to pay a utilities bill), you are using a speech recogniser stored in the server rooms of that Call Centre, the company, its reseller or its solution provider. So in that case, although you are using your unique voice on your personal mobile phone, the recogniser does not reside on it. Curiously, the same holds for voicemail transcription! Although you are using your unique voice on your personal phone to leave a voicemail on your mate’s phone, the speech recogniser used to transcribe that voicemail automatically will reside on some secret server somewhere. That server might be at the headquarters of your mate’s mobile provider, or at whoever is charging your mate for this handy service. In contrast, when you use a dictation / voice-to-text app on your smartphone to voice dial one of your contacts, your personal voiceprint, created during training and stored on the device, is used for the speech recognition process. So recognition is a built-in feature. Nowadays there is, however, a third case. If you are using your smartphone to search for an Indian restaurant on Google Maps, the recogniser actually resides in the cloud, on Google servers, rather than on the device. So there are more and more possible system configurations now!

There are many off-the-shelf speech recognition software packages out there. Nuance is one of the biggest technology providers for both speaker-independent and speaker-dependent / dictation apps. Other automatic speech recognition (ASR) software companies include Loquendo, Telisma, and LumenVox. Companies specialising in speaker-dependent / dictation systems include Philips, Grundig and Olympus, among others. However, Microsoft has also long been active in speech processing, and lately Google has been catching up very fast.

The sky is the limit, as the saying goes!
